Integrating new knowledge without catastrophic interference: computational and theoretical investigations in a hierarchically structured environment

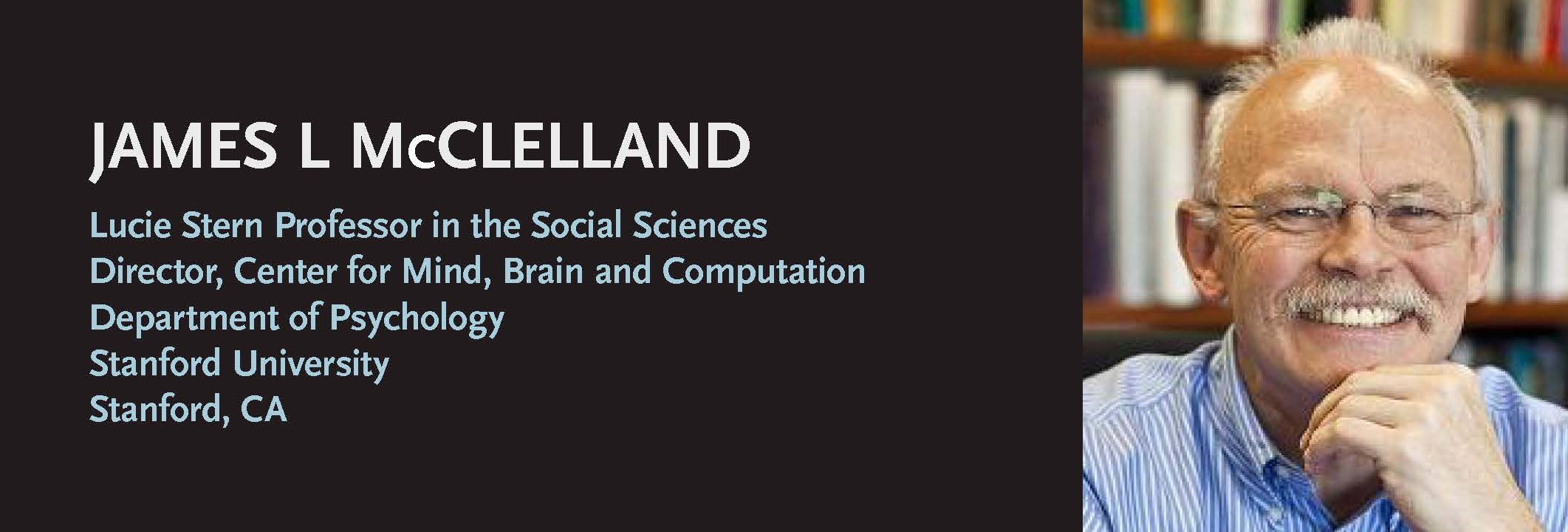

June 30, 2020 | 18:00 CET | ZOOM ID: 7754910236

Director, Center for Mind, Brain and Computation, Dept. of Psychology, Stanford University, Stanford, CA, USA

According to complementary learning systems theory, integrating new memories into a multilayer neural network without interfering with what is already known depends on interleaving presentations of the new memories with ongoing presentations of previously learned items. I use deep linear neural networks in hierarchically structured environments, previously analyzed by Saxe, McClelland, and Ganguli (SMG), to gain new insights into this process. The content of the environment I will consider in this talk can be described by the singular value decomposition (SVD) of the environment's input-output covariance matrix, in which each successive dimension corresponds to a categorical split in the hierarchy. SMG showed that deep linear networks are sufficient to learn the content of such an environment, and that they do so in a stage-like way: the strength of each dimension rises from near zero to its maximum after a delay inversely proportional to that dimension's strength.
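A minimal sketch of the two claims above, assuming a hypothetical four-item environment (canary, salmon, oak, rose, described by nine made-up binary features), one-hot item inputs, and a two-layer linear network trained by full-batch gradient descent from small random weights. None of these choices come from the talk and the numbers are only illustrative. The code takes the SVD of the item-by-feature matrix and tracks how strongly the network expresses each SVD mode during training.

```python
import numpy as np

# Hypothetical toy environment (not the dataset used in the talk): four items in a
# two-level hierarchy (animals vs. plants, then individual items), described by
# nine binary features. Columns are items, rows are features.
items = ["canary", "salmon", "oak", "rose"]
Y = np.array([
    # canary salmon oak rose
    [1, 1, 1, 1],  # grows        (all items)
    [1, 1, 0, 0],  # can move     (animals)
    [0, 0, 1, 1],  # has roots    (plants)
    [1, 0, 0, 0],  # can fly      (canary only)
    [1, 0, 0, 0],  # has feathers (canary only)
    [0, 1, 0, 0],  # can swim     (salmon only)
    [0, 1, 0, 0],  # has gills    (salmon only)
    [0, 0, 1, 0],  # has bark     (oak only)
    [0, 0, 0, 1],  # has petals   (rose only)
], dtype=float)

# With one-hot item inputs (X = I) the input-output covariance is proportional to Y,
# so its SVD gives the modes: the first is shared by all items, the second separates
# animals from plants, the third splits canary/salmon and the fourth splits oak/rose.
U, S, Vt = np.linalg.svd(Y, full_matrices=False)


def train_deep_linear(Y, hidden=16, lr=0.01, steps=3000, init_scale=1e-3, seed=0):
    """Full-batch gradient descent on a two-layer linear network W2 @ W1 with loss
    0.5 * ||Y - W2 @ W1||^2 (one-hot inputs, so X = I). Returns, for every step,
    the strength u_a^T (W2 @ W1) v_a of each SVD mode a of Y."""
    U, _, Vt = np.linalg.svd(Y, full_matrices=False)
    rng = np.random.default_rng(seed)
    n_out, n_in = Y.shape
    W1 = init_scale * rng.standard_normal((hidden, n_in))   # input -> hidden
    W2 = init_scale * rng.standard_normal((n_out, hidden))  # hidden -> output
    strengths = np.empty((steps, Vt.shape[0]))
    for t in range(steps):
        W = W2 @ W1
        strengths[t] = np.diag(U.T @ W @ Vt.T)  # projection of W onto each mode
        E = Y - W                                # prediction error
        dW1 = W2.T @ E                           # negative gradients, both computed
        dW2 = E @ W1.T                           # before either weight is updated
        W1 += lr * dW1
        W2 += lr * dW2
    return strengths


strengths = train_deep_linear(Y)
# First step at which each mode reaches half of its final strength (its singular value).
t_half = [int(np.argmax(strengths[:, a] >= S[a] / 2)) for a in range(len(S))]
for a in range(len(S)):
    print(f"mode {a}: s = {S[a]:.3f}, half-learned at step {t_half[a]}, "
          f"s * t_half = {S[a] * t_half[a]:.0f}")
```

Under these assumptions the modes should be half-learned in order of decreasing singular value, and `s * t_half` should stay roughly constant across modes, up to a slowly varying logarithmic factor set by the initial weight scale. Small initial weights are what produce the long near-zero plateau before each rise.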

Several observations follow when we consider learning a new item not previously encountered in this microenvironment. (1) The item can be characterized by its projection onto the existing structure and by whether it adds a new categorical split. (2) To the extent that the item projects onto existing structure, including it in the training corpus leads to rapid adjustment of the representations of the categories involved, while effectively no adjustment occurs to categories onto which the new item does not project at all. (3) Learning a new split is slow: its dynamics show the same delayed rise to maximum strength, with a delay that depends on the strength of the new dimension. These observations motivate a similarity-weighted interleaved learning scheme in which only items similar to the new item need to be presented alongside it to avoid catastrophic interference.
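Observations (1) and (2) and the interleaving scheme can be sketched in a toy setting. This is one simple operationalization, not the scheme from the talk: the feature set, the hypothetical new items "sparrow" (identical to the canary's features) and "duck" (a swimmer without gills, a pattern no existing item shows), the projection of feature vectors onto the existing output modes, and the use of clipped cosine similarity as sampling weights are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical toy environment (an assumption, not the talk's data).
# Columns are old items, rows are features.
features = ["grows", "can move", "has roots", "can fly", "has feathers",
            "can swim", "has gills", "has bark", "has petals"]
old_items = ["canary", "salmon", "oak", "rose"]
Y = np.array([
    # canary salmon oak rose
    [1, 1, 1, 1],  # grows
    [1, 1, 0, 0],  # can move
    [0, 0, 1, 1],  # has roots
    [1, 0, 0, 0],  # can fly
    [1, 0, 0, 0],  # has feathers
    [0, 1, 0, 0],  # can swim
    [0, 1, 0, 0],  # has gills
    [0, 0, 1, 0],  # has bark
    [0, 0, 0, 1],  # has petals
], dtype=float)
U, S, Vt = np.linalg.svd(Y, full_matrices=False)  # existing output modes in U


def project_new_item(y_new):
    """Coordinates of a new item's feature vector on the existing output modes,
    plus the norm of the part no existing mode explains (a candidate new split)."""
    coords = U.T @ y_new
    residual = y_new - U @ coords
    return coords, float(np.linalg.norm(residual))


def interleaving_weights(y_new):
    """Sampling probabilities over old items, proportional to the cosine
    similarity (clipped at zero) between each old item and the new item."""
    sims = (Y.T @ y_new) / (np.linalg.norm(Y, axis=0) * np.linalg.norm(y_new))
    sims = np.clip(sims, 0.0, None)
    return sims / sims.sum()


# Hypothetical new items, in the feature order above.
sparrow = np.array([1, 1, 0, 1, 1, 0, 0, 0, 0], dtype=float)  # same as canary
duck = np.array([1, 1, 0, 1, 1, 1, 0, 0, 0], dtype=float)     # swims, no gills

coords_s, res_s = project_new_item(sparrow)
coords_d, res_d = project_new_item(duck)
print("sparrow mode coordinates:", np.round(coords_s, 3), "residual:", round(res_s, 3))
print("duck residual:", round(res_d, 3))

# Build one interleaved batch: the new item plus old items drawn by similarity.
rng = np.random.default_rng(0)
w = interleaving_weights(sparrow)
batch = ["sparrow"] + list(rng.choice(old_items, size=5, p=w))
print("weights:", dict(zip(old_items, np.round(w, 3))), "batch:", batch)
```

Here the sparrow lies entirely within the existing structure (zero residual) and has no projection on the oak/rose mode, so training on it should leave that split essentially untouched; the duck leaves a nonzero residual, signalling a pattern that would need a new, slowly learned dimension. Because every item shares "grows", the plants still receive small sampling weights; a sharper weighting (for example, a similarity threshold) would concentrate the interleaved batch further.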

McClelland, J. L., McNaughton, B. L., & Lampinen, A. K. (2020). Integration of new information in memory: New insights from a complementary learning systems perspective. Philosophical Transactions of the Royal Society B, 375, 20190637.

Saxe, A. M., McClelland, J. L., & Ganguli, S. (2019). A mathematical theory of semantic development in deep neural networks. Proceedings of the National Academy of Sciences, 116(23), 11537–11546.